💡 Personal Projects

Vision-Action Transformers for basic assembly tasks (In the making)

This ongoing project focuses on generating high-quality, scalable training data in Isaac Gym to train Action Chunking Transformers for robotic assembly. The goal is to move beyond imitation learning by simulating diverse grasp and manipulation behaviors, then use this data to train a ACT. Future steps involve expanding to vision-action-language models and deploying the full pipeline back into Isaac Gym for closed-loop robotic control.

Features :

- Synthetic trajectory and vision data generation using Isaac Gym with camera-mounted robotic arms.

- ...

Tech Stack :

Traffic Sign Recognition with YOLOv8

Developed as part of a university project at Heilbronn University, this real-time traffic sign recognition system uses YOLOv8n for fast and robust detection. It handles challenging conditions like occlusion, poor lighting, and complex backgrounds by leveraging a custom synthetic dataset, multi-stage classification, and real-time frame filtering.

Features :

- Real-time traffic sign detection using YOLOv8n (Nano version for speed and efficiency).

- Custom synthetic dataset generation with COCO backgrounds and heavy augmentation.

- Two-stage classification specifically for speed limit signs.

- Frame caching logic to reduce false positives during inference.

- Visualization via UI overlay: persistent speed sign display + rotating multi-sign view.

- Trained on 3000+ synthetic images and validated with GTSDB and dashcam footage.

- Fast inference: ~0.06–0.09 seconds/frame.

Tech Stack :

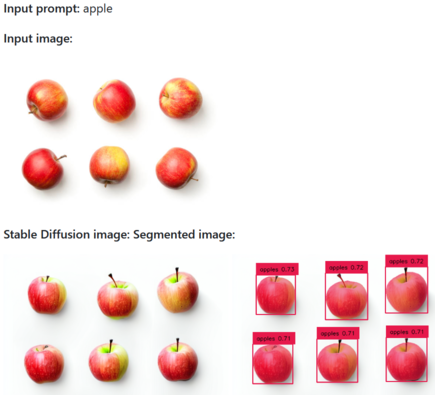

Stabled Grounding SAM

Stabled Grounding SAM is a powerful tool for generating synthetic datasets with pre-segmented images. It combines Stable Diffusion, Grounding DINO, and Segment Anything to create annotated datasets from just a single input image and a label file.

Features :

- Generates synthetic images from a single input image using Stable Diffusion's `img2img`.

- Automatically detects and labels objects using Grounding DINO.

- Refines segmentations using Meta’s Segment Anything model.

- Outputs datasets in YOLO format for easy training integration.

- Great for quickly building vision datasets without manual labeling.